New commitment to deepen work on severe AI risks concludes AI Seoul Summit

Nations across the world have signed up to developing proposals for assessing AI risks over the coming months, in a set of agreements that bring the AI Seoul Summit to an end.

27 nations commit to work together on severe AI risks.

- Nations to work together on thresholds for severe AI risks, including in building biological and chemical weapons

- nations cement commitment to collaborate on AI safety testing and evaluation guidelines

- countries stress importance of innovation and inclusivity ahead of next Summit in France

The Seoul Ministerial Statement sees countries agreeing for the first time to develop shared risk thresholds for frontier AI development and deployment, including agreeing when model capabilities could pose ‘severe risks’ without appropriate mitigations. This could include helping malicious actors to acquire or use chemical or biological weapons, and AI’s ability to evade human oversight, for example by manipulation and deception or autonomous replication and adaptation.

Countries have now set the ambition of developing proposals alongside AI companies, civil society, and academia for discussion ahead of the AI Action Summit which is due to be hosted by France. The move marks an important first step as part of a wider push to develop global standards to address specific AI risks.

This follows a wide-ranging agreement to the “Frontier AI Safety Commitments”, which were signed yesterday by 16 AI technology companies from across the globe, including the US, China, Middle East and Europe – a world first. Unless they have already done so, leading AI developers will also now publish safety frameworks on how they will measure the risks of their frontier AI models, ahead of the AI Action Summit.

Concluding the AI Seoul Summit, countries discussed the importance of supporting AI innovation and inclusivity. They recognised the transformative benefits of AI for the public sector, and committed to supporting an environment which nurtures easy access to AI-related resources for SMEs, start-ups and academia. They also welcomed the potential for AI to provide significant advances in resolving the world’s greatest challenges, such as climate change, global health, food and energy security.

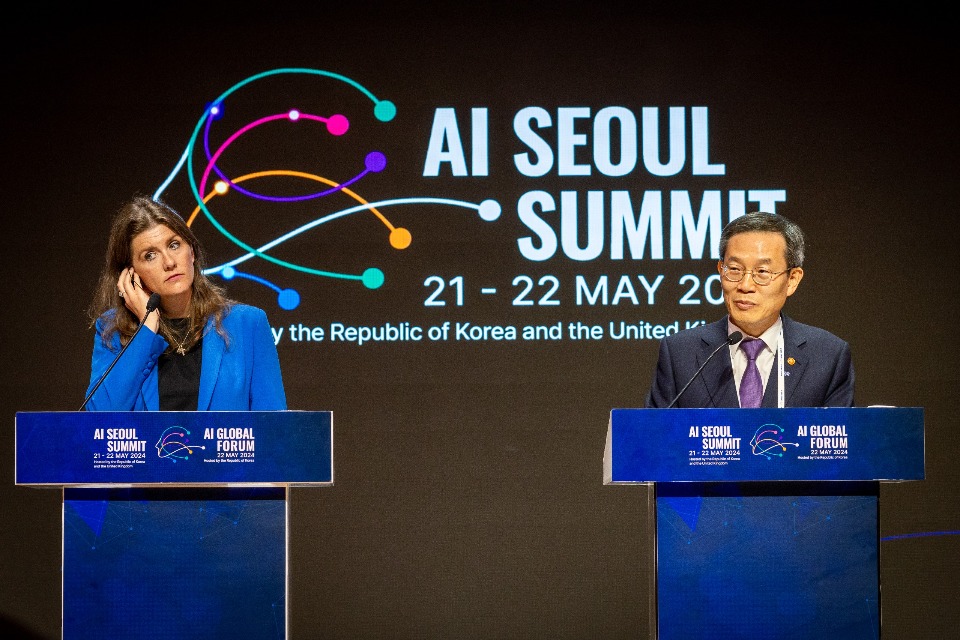

UK Technology Secretary Michelle Donelan said:

It has been a productive two days of discussions which the UK and the Republic of Korea have built upon the ‘Bletchley Effect’ following our inaugural AI Safety Summit which I spearheaded six months ago.

The agreements we have reached in Seoul mark the beginning of Phase Two of our AI Safety agenda, in which the world takes concrete steps to become more resilient to the risks of AI and begins a deepening of our understanding of the science that will underpin a shared approach to AI safety in the future.

For companies, it is about establishing thresholds of risk beyond which they won’t release their models. For countries, we will collaborate to set thresholds where risks become severe. The UK will continue to play the leading role on the global stage to advance these conversations.

Minister Lee Jong-Ho of the Ministry of Science and ICT of the Republic of Korea said:

Through this AI Seoul Summit, 27 nations and the EU have established the goals of AI governance as safety, innovation and inclusion. In particular governments, companies, academia, civil society from various countries have together advanced to strengthen global AI safety capabilities and explore an approach on sustainable AI development.

We will strengthen global cooperation among AI safety institutes worldwide and share successful cases of low-power AI chips to help mitigate the global negative impacts on energy and the environment caused by the spread of AI.

We will carry forward the achievements made in RoK. and UK to the next summit in France, and look forward to minimizing the potential risks and side effects of AI while creating more opportunities and benefits.

Nations have also pledged to boost international cooperation on the science of AI safety. Following on from its interim publication in the run up to the AI Seoul Summit, the International Scientific Report on the Safety of Advanced AI will continue to underpin this shared approach to AI safety science. States have resolved to support future reports on AI risk and looked forward to the next iteration of the Report, due to be published in time for the AI Action Summit. The Report is designed to facilitate a shared science-based understanding of the risks associated with frontier AI among global policymakers, and to sustain that understanding as capabilities continue to increase, helping support safe AI innovation.

The discussions at the AI Seoul Summit and November’s talks at Bletchley Park continue to drive forward the global focus on AI safety, innovation, and inclusivity. Governments, academia, and the wider AI community will now prepare for the AI Action Summit in France, to once again address how the benefits of this generation-defining technology can be realised across the globe.

Notes to editors

Read Seoul Ministerial Statement here.

Signatories of today’s Seoul Ministerial Statement are:

Australia, Canada, Chile, France, Germany, India, Indonesia, Israel, Italy, Japan, Kenya, Mexico, Netherlands, Nigeria, New Zealand, The Philippines, Republic of Korea, Rwanda, Kingdom of Saudi Arabia, Singapore, Spain, Switzerland, Türkiye, Ukraine, United Arab Emirates, United Kingdom, United States of America, and the European Union.

Countries have agreed that “severe risks could be posed” by the potential frontier AI capability to meaningfully assist “non-state actors in advancing the development, production, acquisition or use of chemical or biological weapons”, noting the importance of acting consistently with relevant international law such as the Chemical Weapons Convention and Biological and Toxin Weapons Convention.

Countries have also recognised that severe risks could be posed by the potential frontier AI capability “to evade human oversight” including through “manipulation and deception or autonomous replication and adaptation” and committed to working with companies to implement appropriate safeguards.

China participated in person at the AI Seoul Summit, with representatives from government, civil society, academia and top companies in attendance, building on the approach that started at Bletchley. China engaged in today’s discussions on AI safety which covered areas of collaboration ahead of the France AI Action Summit, and we look forward to continued engagement with China on this work.